How Anomaly Detection Improves Threat Prediction Accuracy

Modern cyber threats demand smarter defenses. Signature-based systems can’t keep up with zero-day exploits or stealthy attacks. That’s where anomaly detection steps in, using machine learning to identify unusual behavior that traditional tools miss.

Key takeaways:

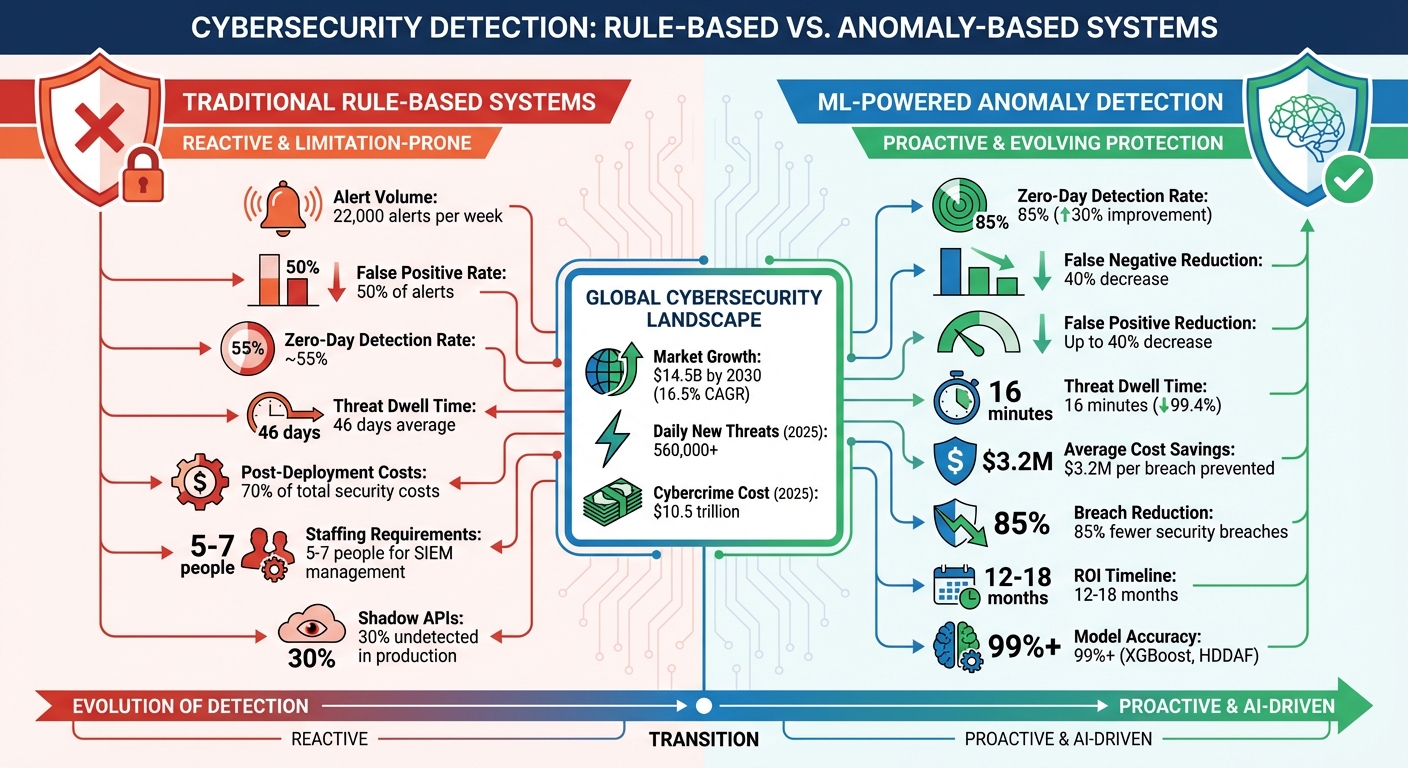

- Why it matters: Missed threats lead to breaches, downtime, and financial losses. Nearly half of all security alerts are false positives, which overwhelms security teams.

- How it works: Machine learning builds a baseline of normal activity, flags deviations, and adapts to new threats. It improves zero-day detection rates (from ~55% to 85%) and cuts false negatives by 40%.

- Benefits: Shorter threat dwell time (from 46 days to as little as 16 minutes), lower costs ($3.2M in average breach-cost savings from real-time detection), and fewer false positives.

Anomaly detection isn’t just a better tool – it’s a smarter way to stay ahead of evolving cyber threats.

Rule-Based vs Anomaly Detection: Performance Metrics and Cost Comparison



Problems with Rule-Based Threat Detection

Rule-based and signature-based detection systems rely on a straightforward concept: they compare incoming traffic to a database of known attack patterns. While this method can handle documented threats effectively, it has serious limitations that attackers exploit. These flaws highlight why more adaptive and forward-looking detection strategies are crucial.

Alert Fatigue and False Positives

Security teams are inundated with alerts, and a large share of them are noise. That leaves analysts overwhelmed and increases the chance that genuine threats go unnoticed.

"The average SOC faces 22,000 alerts a week – and nearly half are false positives. Analysts are overwhelmed, detection rules are outdated, and real threats are slipping through." – AttackIQ

False positives happen when normal activities resemble attack patterns. For instance, a system might flag all Dropbox links as malicious because they are often used in phishing emails, even though employees rely on them for legitimate file sharing. To reduce these errors, teams often use manual fixes like blocklists or safelists. While these measures are easy to implement, they can pile up over time, creating technical debt as the organization grows.
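To make the technical-debt point concrete, here is a minimal Python sketch of a static link rule with a URL safelist. The domain list, the URLs, and the `rule_fires` helper are hypothetical. They mirror the Dropbox example above rather than any product's rule syntax.

```python
from urllib.parse import urlparse

# Hypothetical static rule: file-sharing domains often abused in phishing.
SUSPICIOUS_DOMAINS = {"dropbox.com"}


def host_matches(host: str, domain: str) -> bool:
    """True if host is the domain itself or one of its subdomains."""
    return host == domain or host.endswith("." + domain)


def rule_fires(url: str, safelist: set[str]) -> bool:
    """Alert on any link to a suspicious domain unless that exact URL is safelisted."""
    host = (urlparse(url).hostname or "").lower()
    matched = any(host_matches(host, d) for d in SUSPICIOUS_DOMAINS)
    return matched and url not in safelist


safelist: set[str] = set()
shared_report = "https://www.dropbox.com/s/abc123/q3-report.pdf"
print(rule_fires(shared_report, safelist))  # True: a legitimate share is flagged

# The usual fix is a manual exception that covers only this one link...
safelist.add(shared_report)
print(rule_fires(shared_report, safelist))  # False

# ...so the next legitimate share fires again and needs its own entry.
print(rule_fires("https://www.dropbox.com/s/def456/budget.xlsx", safelist))  # True
```

Each legitimate share needs its own exception, which is how safelists grow along with the organization. Widening the exception to the whole domain would stop the noise, but it would also blind the rule to real phishing links hosted there.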

And it’s not just about the sheer volume of alerts. Rule-based systems also struggle to detect new and unknown threats.

Missing Zero-Day Threats

Rule-based systems can only catch threats already cataloged in their signature databases, leaving organizations exposed to zero-day attacks that exploit vulnerabilities no one has documented yet.

"The primary limitation of [signature-based] approach is that it is reactive. It can only detect threats that have already been identified and added to the signature database. It is utterly ineffective against new, previously unseen threats." – Palo Alto Networks

Attackers take advantage of these gaps by using techniques designed to bypass static detection rules. For example, they employ "low-and-slow" traffic patterns or polymorphic toolchains that constantly evolve. They also use "living-off-the-land" tactics, which involve legitimate system tools and credentials to blend in with normal activity, rarely triggering alarms. Adding to the challenge, over 30% of APIs in production environments are "shadow APIs" – undocumented and unmanaged, making them invisible to traditional detection tools.
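The reactive limitation is easy to see with the simplest possible signature, a file hash. The sketch below is illustrative only. The payload bytes and `SIGNATURE_DB` are hypothetical stand-ins. Production engines use richer signatures than a single hash, but any lookup against a catalog of known content still depends on having seen something close to the attack before.

```python
import hashlib

# Hypothetical bytes standing in for a malware sample that has already been catalogued.
KNOWN_SAMPLE = b"example-dropper-v1: connect c2.example.invalid; drop stage2"

# The "signature database": SHA-256 digests of samples already analyzed.
SIGNATURE_DB = {hashlib.sha256(KNOWN_SAMPLE).hexdigest()}


def signature_match(payload: bytes) -> bool:
    """Exact-match lookup: only payloads already in the database are detected."""
    return hashlib.sha256(payload).hexdigest() in SIGNATURE_DB


# A polymorphic variant changes a few bytes (here, one byte of padding) but keeps its behavior.
variant = KNOWN_SAMPLE + b"\x00"

print(signature_match(KNOWN_SAMPLE))  # True
print(signature_match(variant))       # False: same behavior, new hash, no alert
```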

These problems not only increase security risks but also drive up costs for organizations.

Financial and Operational Costs

The expenses tied to rule-based systems extend well beyond their initial setup. In fact, 70% of security costs occur after deployment, covering ongoing maintenance, updates, and monitoring. Large organizations often need a team of five to seven people just to manage legacy SIEM systems.

Because these systems are reactive, they require constant manual updates to address emerging threats, which drains resources and leaves little time for proactive threat hunting. Meanwhile, attackers are moving faster – the time between discovering a vulnerability and exploiting it is now down to just minutes, making manual rule updates far too slow. Companies that implement real-time threat detection can save an average of $3.2 million in potential breach-related costs, emphasizing the financial strain caused by delayed detection and missed threats.

How Anomaly Detection Improves Threat Prediction

Instead of matching traffic against known signatures, anomaly detection learns what normal activity looks like and flags departures from it. Here’s how it establishes behavioral baselines, which algorithms it relies on, and how it adapts to evolving threat patterns.

Building Behavioral Baselines

Machine learning models dive into historical data to identify what "normal" looks like in your environment. They analyze factors like user login habits, access locations, data transfer patterns, and communication flows between network hosts. For wireless networks, these systems also monitor technical metrics like RSSI (Received Signal Strength Indicator), SNR (Signal to Noise Ratio), and Path Loss, creating a detailed profile of typical activity.

Once these baselines are set, the system compares incoming real-time data to detect unusual deviations. For instance, if an employee who usually logs in from New York during standard office hours suddenly accesses sensitive files from Eastern Europe at 3:00 AM, the system flags this as a high-priority anomaly – even if the behavior doesn’t match any known attack patterns.

Because each event is compared against the organization’s own baseline rather than a catalog of known attacks, these models can surface subtle threats that would otherwise go unnoticed.
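Here is a minimal sketch of the baseline-and-deviation idea, using the New York login example. The two features (login hour and country), the 3-standard-deviation threshold, and the `LoginBaseline` class are assumptions made for illustration. Real systems learn many more features per user.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class LoginBaseline:
    """Hypothetical per-user profile of 'normal' login hours and countries."""
    hours: list[int] = field(default_factory=list)
    countries: set[str] = field(default_factory=set)

    def learn(self, hour: int, country: str) -> None:
        self.hours.append(hour)
        self.countries.add(country)

    def deviations(self, hour: int, country: str, z_threshold: float = 3.0) -> list[str]:
        """Return the reasons a new login departs from the baseline (empty list = normal)."""
        reasons = []
        mu = mean(self.hours)
        sigma = pstdev(self.hours) or 1.0  # avoid dividing by zero for a constant history
        z = abs(hour - mu) / sigma
        if z > z_threshold:
            reasons.append(f"unusual hour {hour}:00 (z={z:.1f})")
        if country not in self.countries:
            reasons.append(f"first login from {country}")
        return reasons


baseline = LoginBaseline()
for h in [9, 9, 10, 10, 11, 13, 14, 15, 16, 17]:  # office-hours history from New York
    baseline.learn(h, "US")

print(baseline.deviations(10, "US"))  # []
print(baseline.deviations(3, "RO"))   # two reasons: unusual hour and new country
```

Treating the hour as a plain number is a simplification. Here 23:00 and 01:00 look far apart even though they are two hours apart. A production model would handle that with a circular encoding of time.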

Using Machine Learning Algorithms

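Different machine learning methods handle different detection challenges. Unsupervised techniques such as clustering and isolation forests excel at identifying activity that deviates from normal patterns, even when no labeled examples of an attack exist. Supervised models trained on labeled attack data also perform well: in published performance comparisons, XGBoost reached 0.99 accuracy, outperforming the other models tested. Because attacks are rare in most security datasets, accuracy figures like this are best read alongside precision and recall.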
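Isolation forests work by partitioning the data at random. Anomalous points sit in sparse regions, so a few random splits are enough to separate them from everything else. The following is a compact, standard-library Python sketch of that algorithm. It builds random trees, normalizes path lengths by c(n), and scores each point as 2^(-E[h]/c(n)). It is not a tuned implementation, and the feature pair and data are hypothetical.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def avg_path(n: int) -> float:
    """c(n): average path length of an unsuccessful binary-search-tree lookup over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Split on a random feature at a random threshold until points are isolated or depth runs out."""
    if depth >= max_depth or len(points) <= 1:
        return ("leaf", len(points))
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:
        return ("leaf", len(points))
    split = rng.uniform(lo, hi)
    left = [p for p in points if p[dim] < split]
    right = [p for p in points if p[dim] >= split]
    return ("node", dim, split,
            build_tree(left, depth + 1, max_depth, rng),
            build_tree(right, depth + 1, max_depth, rng))


def path_length(x, node, depth=0):
    """Depth at which x lands in a leaf, plus c(size) for points left unseparated there."""
    if node[0] == "leaf":
        return depth + avg_path(node[1])
    _, dim, split, left, right = node
    return path_length(x, left if x[dim] < split else right, depth + 1)


class IsolationForest:
    def __init__(self, n_trees=100, sample_size=256, seed=0):
        self.n_trees = n_trees
        self.sample_size = sample_size
        self.rng = random.Random(seed)

    def fit(self, data):
        self.psi = min(self.sample_size, len(data))
        if self.psi < 2:
            raise ValueError("need at least two training points")
        max_depth = math.ceil(math.log2(self.psi))
        self.trees = [build_tree(self.rng.sample(data, self.psi), 0, max_depth, self.rng)
                      for _ in range(self.n_trees)]
        return self

    def score(self, x):
        """Anomaly score in (0, 1]: close to 1 means x was isolated in very few splits."""
        mean_depth = sum(path_length(x, t) for t in self.trees) / len(self.trees)
        return 2.0 ** (-mean_depth / avg_path(self.psi))


# Hypothetical per-host features: (logins per hour, MB sent outbound)
data_rng = random.Random(42)
normal = [(data_rng.gauss(5, 1), data_rng.gauss(50, 5)) for _ in range(200)]
forest = IsolationForest(seed=0).fit(normal)

print(round(forest.score((5.0, 50.0)), 2))    # typical host: well below 1
print(round(forest.score((40.0, 900.0)), 2))  # login burst plus large upload: close to 1
```

Libraries such as scikit-learn ship an optimized `IsolationForest`. The sketch only shows why points isolated after a few splits score close to 1.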

"AI-driven detection can reduce false positives by up to 40 percent while shortening the time required to recognize novel threats." – Rapid7

Dynamic graph modeling takes detection further by analyzing the evolving relationships between network hosts. Rather than flagging isolated events, these systems identify abnormal communication sequences across the network. Simpler probabilistic models have also performed well on related problems: in December 2024, researchers at SIMAD University used a Naive Bayes-based framework to analyze the 2022 CISA Known Exploited Vulnerabilities catalog, achieving 0.9810 accuracy in detecting vulnerabilities.
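The core graph idea is to judge relationships rather than single events. A deliberately simple heuristic can illustrate it. Compare the host-to-host edges in a new time window against the edges seen historically, then flag hosts that suddenly fan out to many new peers, a pattern associated with lateral movement. This hypothetical sketch is far simpler than the dynamic graph models described above, and the host names are invented.

```python
from collections import defaultdict


def edge_baseline(flows):
    """Set of (source, destination) host pairs seen during the baseline period."""
    return {(src, dst) for src, dst in flows}


def new_peer_fanout(baseline, window_flows, min_new_peers=3):
    """Hosts that contacted at least `min_new_peers` peers they never talked to before."""
    new_peers = defaultdict(set)
    for src, dst in window_flows:
        if (src, dst) not in baseline:
            new_peers[src].add(dst)
    return {src: sorted(peers) for src, peers in new_peers.items()
            if len(peers) >= min_new_peers}


# Hypothetical flows; a real system would build these from flow or EDR logs.
history = [("ws1", "fileserver"), ("ws1", "dc"), ("ws2", "fileserver"), ("ws2", "mail")]
window = [("ws1", "fileserver"), ("ws2", "ws3"), ("ws2", "ws4"), ("ws2", "ws5"), ("ws2", "dc")]

print(new_peer_fanout(edge_baseline(history), window))
# {'ws2': ['dc', 'ws3', 'ws4', 'ws5']}
```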

Adapting to New Threats

Modern anomaly detection systems don’t just stop at initial detection – they continuously refine themselves to address emerging threats. Unlike static, rule-based systems, these models evolve by learning from fresh data. For example, frameworks like HDDAF (Hybrid Drift Detection and Adaptation Framework) effectively handle concept drift, which refers to shifts in normal behavior caused by system changes or new attack methods. HDDAF achieved a macro F1 score over 99% on the CIC-IDS2017 dataset, maintaining strong performance even in fast-changing data streams.
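Frameworks like HDDAF use dedicated detectors, but the statistical core of many drift detectors fits in a few lines. The Python example below is a simplified two-window test built on Hoeffding's inequality. It is not HDDAF's algorithm. It compares the detector's error rate in a reference window with the rate in a recent window, and it reports drift only when the gap exceeds what random noise could explain at confidence level `delta`. The window sizes and error labels are hypothetical.

```python
import math


def hoeffding_epsilon(n: int, delta: float) -> float:
    """Bound such that the mean of n i.i.d. values in [0, 1] is within it w.p. >= 1 - delta."""
    return math.sqrt(math.log(2.0 / delta) / (2.0 * n))


def drift_detected(reference: list[int], recent: list[int], delta: float = 0.01) -> bool:
    """Compare error rates of two windows; flag drift if the gap exceeds both bounds combined."""
    ref_rate = sum(reference) / len(reference)
    new_rate = sum(recent) / len(recent)
    eps = hoeffding_epsilon(len(reference), delta) + hoeffding_epsilon(len(recent), delta)
    return abs(new_rate - ref_rate) > eps


# 1 = the detector misclassified an event, 0 = it was correct (hypothetical analyst labels).
reference = [1] * 10 + [0] * 490   # 2% error rate while the baseline still fit
stable = [1] * 15 + [0] * 485      # 3%: ordinary noise
drifted = [1] * 150 + [0] * 350    # 30%: normal behavior has shifted

print(drift_detected(reference, stable))   # False
print(drift_detected(reference, drifted))  # True
```

The test is conservative. A union bound over both windows means that when nothing has changed, it raises a false drift alarm with probability at most twice `delta`.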

In February 2025, researchers introduced the APT-LLM framework, which combines language models such as DistilBERT with autoencoders to detect Advanced Persistent Threats (APTs). The system identified stealthy threats that mimicked normal behavior, even when those threats made up just 0.004% of the dataset. This ability to adapt and learn from very few examples is what sets modern anomaly detection apart from older, static methods.

Benefits of Anomaly Detection for Organizations

Anomaly detection systems tackle the challenges of alert fatigue and delayed responses that often plague rule-based systems. These tools not only enhance speed but also significantly cut costs and reduce risks for organizations.

Faster Detection and Response Times

In cybersecurity, speed can make all the difference between containing a threat and suffering a major breach. Modern anomaly detection systems monitor network traffic, system logs, and transaction data in real time, instantly spotting unusual patterns. This eliminates the delays of traditional signature-based systems, where teams wait for vendor updates to address new threats.

"Speed determines success in modern cybersecurity operations. Attackers need only seconds to exploit vulnerabilities, while organizations often wait days or weeks for patches." – Kriti Awasthi, Author, Fidelis Security

With machine learning, threat dwell time can drop dramatically – from 46 days to just 16 minutes. These systems can also trigger automated responses, such as quarantining suspicious activity before it spreads. By using intelligent filtering, they reduce false positives, allowing security teams to focus on the most critical threats.

Reduced Costs and Risk

Early detection doesn’t just save time – it also saves money. Organizations using advanced anomaly detection report an 85% reduction in security breaches, while live threat detection capabilities save an average of $3.2 million in potential breach costs. Most companies see a return on their investment in anomaly detection systems within 12 to 18 months.

These systems also cut costs by automating repetitive tasks. Unlike human analysts, AI-driven tools provide 24/7 monitoring without fatigue, maintaining vigilance through weekends and holidays. Acting as force multipliers, they free security teams to focus on complex investigations and strategic threat hunting. Early detection also minimizes operational disruption, reducing downtime and emergency response costs and helping ensure business continuity.

Examples of Detected Threats

The practical benefits of anomaly detection are clear from the types of threats it can uncover. These systems excel at identifying issues that traditional defenses might miss. For instance, they can flag unusual process behavior, such as a core Windows process like sppsvc.exe making unexpected outbound connections or loading unauthorized DLLs. They can also detect abnormal data transfers, like an employee account suddenly exfiltrating large volumes of sensitive files during off-peak hours.

Advanced frameworks analyze "provenance data", revealing subtle lateral movements by mapping relationships between processes, files, and network connections. Even in datasets where Advanced Persistent Threats (APTs) make up just 0.004% of activity, specialized models can spot these stealthy threats. Additionally, they detect undocumented shadow APIs by identifying unusual usage patterns.

Platforms like The Security Bulldog (https://securitybulldog.com) harness these capabilities, enabling organizations to quickly identify, analyze, and respond to emerging cyber threats. This not only strengthens security but also boosts operational efficiency.

Best Practices for Implementing Anomaly Detection

To fully leverage the advantages of anomaly detection, it’s crucial to focus on selecting the right tools, ensuring data quality, and integrating solutions seamlessly into your security ecosystem. By following these best practices, you can refine your anomaly detection approach to stay ahead of emerging threats.

Selecting an Anomaly Detection Solution

When choosing an anomaly detection platform, scalability and compatibility with frameworks like the Open Cybersecurity Schema Framework (OCSF) should be top priorities. This ensures smooth data normalization across tools like SIEM, SOAR, and XDR systems.

Platforms equipped with white-box models or Explainable AI (XAI) tools, such as SHAP or LIME, can show which features triggered an alert. For instance, an alert might be explained by a login attempt from two different countries within an hour.
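XAI tools such as SHAP or LIME explain the outputs of complex models. A white-box rule, by contrast, is explainable by construction because every alert carries its own reason. The sketch below writes the "two countries within an hour" case as an impossible-travel check in Python. The 1,000 km/h ceiling (roughly airliner speed), the approximate city coordinates, and the `Login` record are assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Login:
    user: str
    time: datetime
    lat: float
    lon: float
    country: str


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    r = 6371.0  # mean Earth radius
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def impossible_travel(prev: Login, curr: Login, max_kmh: float = 1000.0) -> Optional[str]:
    """Return a human-readable explanation if the implied travel speed is implausible."""
    km = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    hours = (curr.time - prev.time).total_seconds() / 3600
    if hours <= 0:
        speed = math.inf if km > 0 else 0.0
    else:
        speed = km / hours
    if speed <= max_kmh:
        return None
    return (f"{curr.user}: {prev.country} -> {curr.country}, {km:,.0f} km in "
            f"{hours * 60:.0f} min (~{speed:,.0f} km/h, limit {max_kmh:,.0f})")


ny = Login("alice", datetime(2025, 3, 1, 9, 0), 40.71, -74.01, "US")
bucharest = Login("alice", datetime(2025, 3, 1, 9, 40), 44.43, 26.10, "RO")
ny_later = Login("alice", datetime(2025, 3, 1, 11, 0), 40.76, -73.98, "US")

print(impossible_travel(ny, bucharest))  # explanation string for the analyst
print(impossible_travel(ny, ny_later))   # None
```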

Performance matters too. Opt for solutions that can process large datasets without delays. With the anomaly detection market projected to reach $14.5 billion by 2030, growing at a compound annual rate of 16.5%, demand for high-performance tools is clearly surging.

Additionally, look for platforms that offer automated retraining and drift monitoring. Hybrid models – combining statistical methods for quick triage with machine learning for complex attack patterns – strike a good balance between speed and depth.
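The hybrid pattern can be sketched under a few stated assumptions. A cheap robust statistic screens every value. Here that statistic is the modified z-score built from the median and the median absolute deviation, with the commonly used 0.6745 constant and 3.5 cutoff. A hypothetical `deep_check` callable, standing in for a trained ML model, then runs only on the values that survive triage.

```python
from statistics import median
from typing import Callable


def robust_z(values: list[float]) -> list[float]:
    """Modified z-scores based on the median and median absolute deviation (MAD)."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return [0.0 for _ in values]  # no spread to measure against; triage flags nothing
    return [0.6745 * (v - med) / mad for v in values]


def hybrid_detect(values: list[float],
                  deep_check: Callable[[int], bool],
                  threshold: float = 3.5) -> list[int]:
    """Stage 1: cheap statistical triage. Stage 2: expensive check only on flagged indices."""
    candidates = [i for i, z in enumerate(robust_z(values)) if abs(z) > threshold]
    return [i for i in candidates if deep_check(i)]


bytes_out = [120, 130, 125, 118, 122, 127, 5000, 124]  # hypothetical MB sent per host-hour
calls = []


def deep_check(i: int) -> bool:
    """Placeholder for the ML stage (e.g., a trained classifier); it only records the call."""
    calls.append(i)
    return True


print(hybrid_detect(bytes_out, deep_check))  # [6]
print(calls)  # [6]: the expensive stage ran once instead of eight times
```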

Maintaining Data Quality and Model Accuracy

Accurate anomaly detection relies on clean data and regular updates to detection models. Raw security logs often contain duplicates, gaps, and inconsistencies, making multi-stage preprocessing essential to filter noise and standardize inputs.
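Here is a minimal Python sketch of such a preprocessing stage. The four-field log schema and the sample records are hypothetical. The stage drops records with gaps, normalizes case, whitespace, and time zones, and then removes duplicates that differ only in formatting.

```python
from datetime import datetime, timezone
from typing import Optional

REQUIRED_FIELDS = ("timestamp", "user", "src_ip", "action")  # hypothetical log schema


def normalize(record: dict) -> Optional[tuple]:
    """Standardize one raw log record, or return None if a required field is missing."""
    for f in REQUIRED_FIELDS:
        value = record.get(f)
        if value is None or not str(value).strip():
            return None  # gap: drop the record rather than guess
    # Assumes timestamps carry a UTC offset; everything is converted to UTC.
    ts = datetime.fromisoformat(record["timestamp"]).astimezone(timezone.utc)
    return (ts.isoformat(),
            str(record["user"]).strip().lower(),
            str(record["src_ip"]).strip(),
            str(record["action"]).strip().lower())


def preprocess(records: list[dict]) -> list[tuple]:
    """Normalize, drop incomplete records, and remove duplicates while keeping order."""
    seen, clean = set(), []
    for rec in records:
        row = normalize(rec)
        if row is not None and row not in seen:
            seen.add(row)
            clean.append(row)
    return clean


raw = [
    {"timestamp": "2025-03-01T09:00:00+00:00", "user": "Alice ", "src_ip": "10.0.0.5", "action": "LOGIN"},
    # The same event forwarded by a second collector in a different time zone.
    {"timestamp": "2025-03-01T04:00:00-05:00", "user": "alice", "src_ip": "10.0.0.5", "action": "login"},
    {"timestamp": "2025-03-01T09:05:00+00:00", "user": "bob", "src_ip": "", "action": "login"},
    {"timestamp": "2025-03-01T09:07:00+00:00", "user": "bob", "src_ip": "10.0.0.9", "action": "logout"},
]

for row in preprocess(raw):
    print(row)
```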

Focus on high-impact features like login frequency, device location, and IP reputation. Use statistical tests, such as t-tests, ANOVA, or chi-squared tests, to monitor for shifts in the data that might indicate concept or feature drift. Feature reduction can also streamline processing: in one reported case, halving the feature set (from 42 to 21 features) had minimal impact on performance.
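For categorical features such as login country, a chi-squared test can be computed with the standard library alone. This sketch runs a test of homogeneity between a reference period and the current period. The critical values are the standard table values at α = 0.05, and the country counts are hypothetical.

```python
# Chi-squared critical values at alpha = 0.05 for 1-5 degrees of freedom (standard table).
# Extend this table to test features with more than six categories.
CHI2_CRIT_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488, 5: 11.070}


def chi_squared_drift(reference: dict[str, int], current: dict[str, int]) -> tuple[float, bool]:
    """Chi-squared test of homogeneity on one categorical feature (e.g., login country)."""
    categories = sorted(set(reference) | set(current))
    rows = [[reference.get(c, 0) for c in categories],
            [current.get(c, 0) for c in categories]]
    row_totals = [sum(r) for r in rows]
    col_totals = [rows[0][j] + rows[1][j] for j in range(len(categories))]
    grand = sum(row_totals)
    stat = 0.0
    for i in range(2):
        for j in range(len(categories)):
            expected = row_totals[i] * col_totals[j] / grand
            stat += (rows[i][j] - expected) ** 2 / expected
    df = len(categories) - 1
    return stat, stat > CHI2_CRIT_05[df]


reference = {"US": 900, "CA": 80, "GB": 20}
same_mix = {"US": 450, "CA": 42, "GB": 8}
new_mix = {"US": 300, "CA": 40, "GB": 10, "RO": 150}

print(chi_squared_drift(reference, same_mix))  # small statistic, no drift
print(chi_squared_drift(reference, new_mix))   # large statistic, drift
```

The chi-squared approximation becomes unreliable when expected counts fall below about 5, so rare categories are usually pooled before testing.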

A notable example comes from researchers at the University of Málaga, who introduced the Hybrid Drift Detection and Adaptation Framework (HDDAF) in 2025. Using Hoeffding drift detection, their framework maintained a macro F1 score above 99% on the CIC-IDS2017 dataset, balancing fine-tuning with full retraining.

Incorporating a human-in-the-loop (HITL) process can further improve detection accuracy: when analysts label alerts, those labels are used to fine-tune the models continuously. To address class imbalance, oversampling techniques such as SMOTE (Synthetic Minority Over-sampling Technique) ensure that rare but critical attack types are adequately represented in training data.
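The core of SMOTE is simple. Each synthetic sample is placed at a random point on the line segment between a minority-class sample and one of its k nearest minority-class neighbors. The Python sketch below implements only that interpolation step, and its features and values are hypothetical. Libraries such as imbalanced-learn provide a complete implementation.

```python
import math
import random


def smote(minority, n_new, k=3, seed=0):
    """Generate n_new synthetic samples by interpolating between minority-class neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        # k nearest neighbors of x among the other minority samples (Euclidean distance)
        others = [p for j, p in enumerate(minority) if j != i]
        neighbors = sorted(others, key=lambda p: math.dist(p, x))[:k]
        nn = rng.choice(neighbors)
        gap = rng.random()  # random position along the segment from x to nn, in [0, 1)
        synthetic.append(tuple(xi + gap * (ni - xi) for xi, ni in zip(x, nn)))
    return synthetic


# Hypothetical features for a rare attack class: (failed logins per minute, distinct hosts touched)
rare_attacks = [(30.0, 12.0), (28.0, 15.0), (35.0, 11.0), (32.0, 14.0)]
new_samples = smote(rare_attacks, n_new=8)
print(len(new_samples))  # 8
```

Apply oversampling to training data only, after the train/test split, so that synthetic points do not leak into evaluation.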

Integrating with Existing Security Tools

Effective integration amplifies the value of anomaly detection by enabling data correlation across your security infrastructure. By connecting anomaly detection with tools like SIEM, SOAR, UEBA, endpoint detection, and threat intelligence platforms, you can create a unified security view. This approach transforms isolated anomalies into high-confidence, multi-stage incident reports, reducing alert fatigue and speeding up threat response.
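One simple way to turn isolated anomalies into a multi-stage incident is to group alerts by entity and time. The sketch below is a hypothetical correlation rule, not any SIEM's built-in logic. It chains alerts about the same user or host that arrive within 30 minutes of each other, and it reports a chain only when at least two different tools contributed to it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from itertools import groupby


@dataclass
class Alert:
    entity: str   # user or host the alert is about
    source: str   # tool that raised it, e.g. "UEBA", "EDR", "SIEM"
    time: datetime
    detail: str


def correlate(alerts, window=timedelta(minutes=30), min_sources=2):
    """Chain same-entity alerts that occur within `window` of each other and keep
    chains confirmed by at least `min_sources` distinct tools."""
    incidents = []
    ordered = sorted(alerts, key=lambda a: (a.entity, a.time))
    for entity, group in groupby(ordered, key=lambda a: a.entity):
        chain = []
        for alert in group:
            if chain and alert.time - chain[-1].time > window:
                if len({a.source for a in chain}) >= min_sources:
                    incidents.append((entity, chain))
                chain = []
            chain.append(alert)
        if chain and len({a.source for a in chain}) >= min_sources:
            incidents.append((entity, chain))
    return incidents


t = datetime(2025, 3, 1, 10, 0)
alerts = [
    Alert("alice", "UEBA", t, "login from new country"),
    Alert("alice", "EDR", t + timedelta(minutes=12), "unsigned binary executed"),
    Alert("alice", "SIEM", t + timedelta(minutes=20), "large outbound transfer"),
    Alert("bob", "EDR", t, "macro-enabled document opened"),
    Alert("alice", "UEBA", t + timedelta(hours=5), "off-hours login"),
]

for entity, chain in correlate(alerts):
    print(entity, [a.source for a in chain])
# alice ['UEBA', 'EDR', 'SIEM']
```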

Behavioral baselines are another key element, helping to identify significant deviations from normal activity. Queries written in Kusto Query Language (KQL) can filter logs at the source, so alerts are triggered only by relevant data. AI-driven log filtering and deduplication can cut alert volumes by as much as 50%.

For example, platforms like The Security Bulldog excel in this area. Their proprietary NLP engine distills open-source cyber intelligence and integrates with existing SOAR and SIEM tools. This allows analysts to trace anomalies back to their root causes, rather than treating them as isolated events.

Before deploying new configurations, always test them in a sandbox environment to avoid disrupting live workflows. Watchlists of known benign entities can further reduce noise. Since fewer than half of security operations teams effectively integrate Cyber Threat Intelligence (CTI) into incident investigations, doing so well can provide a critical edge.

Conclusion

Anomaly detection has reshaped how organizations handle cybersecurity, moving the focus from reacting to threats after they occur to actively preventing them. Traditional systems are limited to recognizing known threats, leaving networks exposed to zero-day attacks and unfamiliar attack patterns. Anomaly detection, on the other hand, establishes behavioral baselines and identifies deviations in real time, making it possible to detect emerging threats as they happen.

Advanced systems using machine learning have shown outstanding performance, with some achieving macro F1 scores exceeding 99% on benchmark datasets. These results emphasize the effectiveness of machine learning in modern threat detection.

"Attackers are outpacing human response, and traditional methods are insufficient." – Joan Nneji, Panaseer

The numbers are staggering: by 2025, more than 560,000 new cyber threats are detected daily, and the annual cost of cybercrime was projected to reach $10.5 trillion. Outdated defense methods can no longer keep up. Anomaly detection systems, powered by machine learning, adapt as networks grow and attacker strategies evolve. For instance, during the 2023 MOVEit supply chain attack, AI-driven anomaly detection systems flagged unusual data transfers before signature-based systems could respond. This early warning gave organizations critical time to act.

These real-world examples highlight that anomaly detection is far more than a theoretical upgrade – it’s an essential tool for staying ahead in the ever-changing cybersecurity landscape.

FAQs

What data do anomaly detection models need to learn ‘normal’ behavior?

To effectively identify deviations, anomaly detection models need data that reflects standard operations. This data often includes network traffic patterns, user activity logs, system events, and communication sequences. By examining these inputs, the models can create a baseline of what ‘normal’ behavior looks like and flag irregularities that might signal potential threats.

How do these systems handle changing user behavior without breaking alerts?

These systems rely on flexible mechanisms to keep up with changing user behavior without raising unnecessary alarms. Techniques like drift detection, adversarial training, and incremental learning allow them to adapt to data shifts as they happen. On top of that, methods such as pseudo-labeling, knowledge distillation, and active learning fine-tune detection accuracy on the fly. This approach helps maintain strong performance as user patterns and threats shift, while reducing false positives and missed warnings.

What’s the fastest way to integrate anomaly detection into SIEM/SOAR?

The fastest way to incorporate anomaly detection into SIEM or SOAR systems is by leveraging pre-built analytics tools and rules. For example, platforms like The Security Bulldog simplify this process through automation and natural language processing (NLP), boosting detection efficiency. Many SIEM and SOAR platforms also provide pre-configured anomaly detection apps or rules, which can be tailored to your specific data sources. This approach allows for quicker setup and more effective threat detection.